Human Centric AI

Engineering alignment for a safer tomorrow.

The Aligned Sovereign Intelligence Core (ASIC)

We engineer alignment for a safer tomorrow with policy and operations tools that complement neural network R&D. ASIC is an MIT open-topology that pioneers alignment engineering throughout the AI stack.

Overview

For alignment reporting, ASIC follows global standards used by major institutions around the world, including ISO/IEC 42001, the NIST AI Risk Management Framework, and the EU AI Act. View our alignment mapping:

This video explains the AI alignment challenge and how ASIC helps us solve it:

AI Alignment

Objective

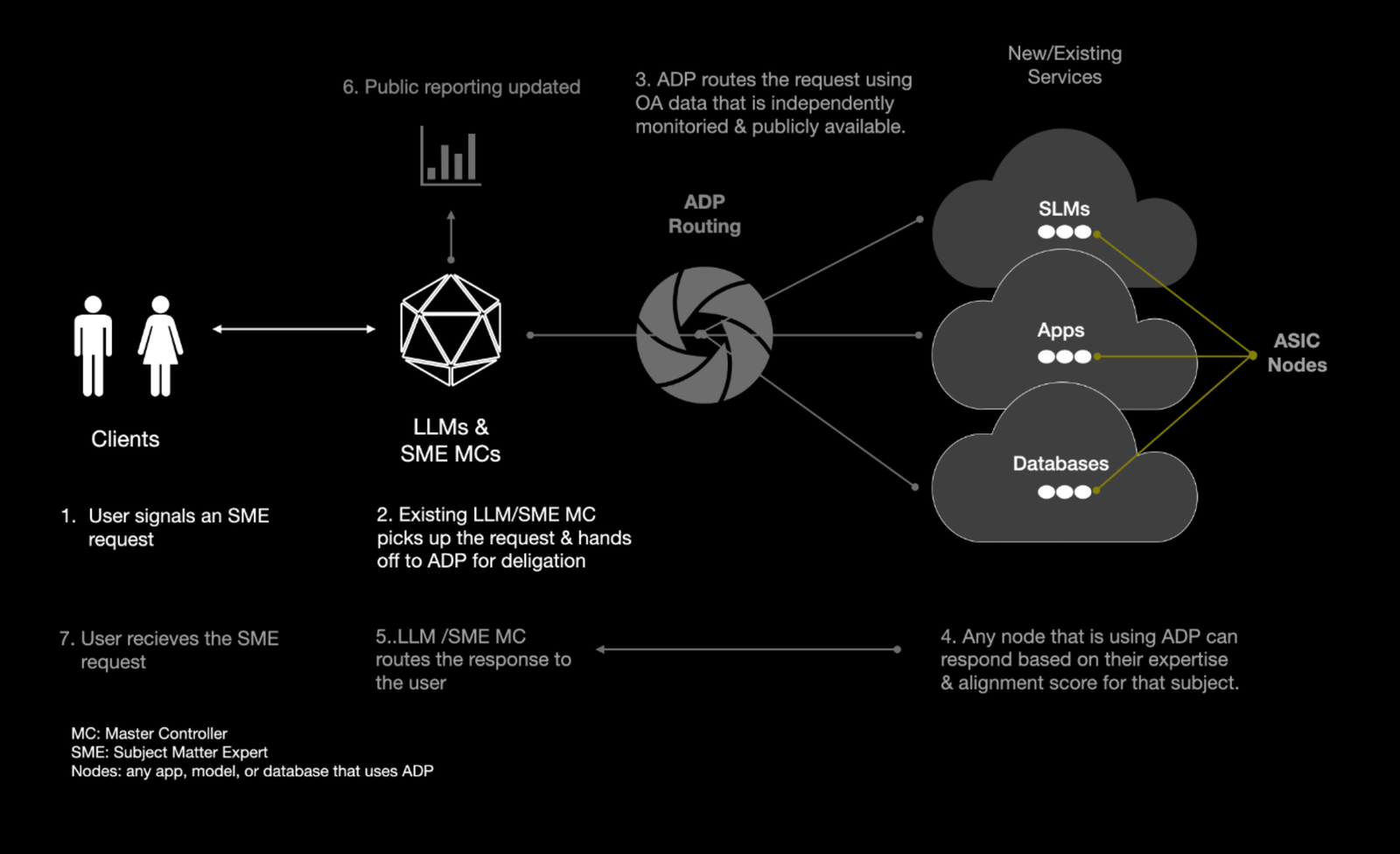

Our objective is to advance AI alignment via top-down transparency, ensuring pro-human AI collaboration. See the Statement on AI Risk here. View Alignment Delegation Protocol demos:

Approach

ASIC takes a three-way approach to the alignment problem, bridging engineering, data science, and governance. View our design:

Community

We are researchers driving safe AI, with roots at Data Science Reality, an education hub with over 170k followers on LinkedIn. View our raison d’être: